Introduction to Central Processing Units (CPUs)

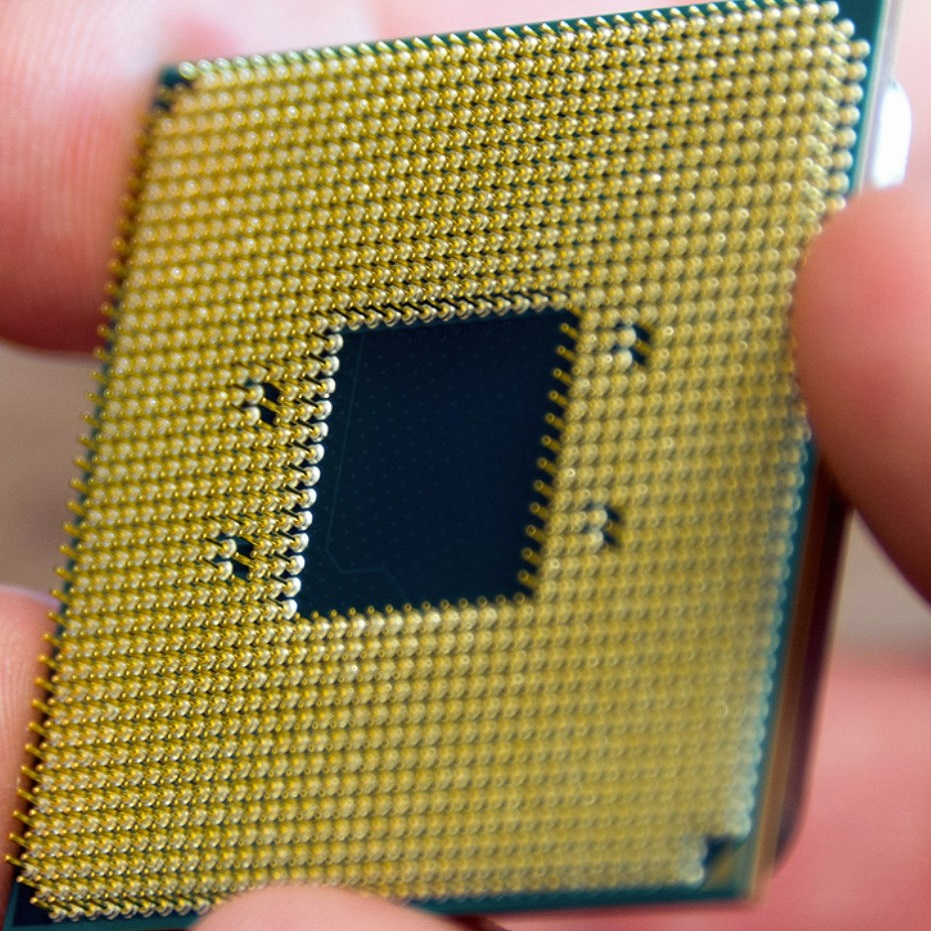

At the heart of every computer lies the Central Processing Unit (CPU), often termed the computer’s brain. The CPU is the primary component responsible for interpreting and executing most of the commands from the computer’s other hardware and software. All the tasks that we associate with computing, such as running applications, executing calculations, and processing data, are fundamentally carried out by the CPU.

A CPU’s performance greatly influences the overall speed and efficiency of a computer system. It affects how quickly programs can run, how many tasks can be handled at once, and the computer’s ability to process complex instructions. Modern CPUs aren’t just singular units; they often consist of multiple cores, each capable of processing instructions independently, which enhances the computer’s multitasking abilities.

To truly appreciate the capabilities and complexity of modern CPUs, we must dissect the unit and understand its components and functions. In the following sections of this blog, we will explore the key elements that make up a CPU, delve into its core functions, examine the enhancements that boost CPU performance, and demystify some of the terminology associated with it.

By gaining a better understanding of CPUs, we can appreciate not only the incredible technological progress from early computation devices but also how critical the CPU is in determining a computer’s performance. Whether it’s powering a lightweight tablet or a robust server system, the CPU remains a cornerstone of modern computing.

Core Functions of the CPU

A CPU handles many tasks to ensure a computer runs smoothly.

Fetching Instructions

Fetching is where every instruction begins. The CPU retrieves the next instruction from memory so that it can be processed.

Decoding Instructions

Next, the CPU decodes instructions. This means it translates them into actions it understands.

Executing Instructions

After decoding, the CPU executes instructions. This involves doing the actual computing work.

Managing Program Flow

The CPU also manages how programs run. It keeps track of which instruction comes next and changes course when a program branches, loops, or calls a function.

Handling Interrupts

Handling interrupts is key too. The CPU responds to urgent signals from hardware or software.

Managing Caches

Lastly, the CPU manages caches. Caches keep important data handy for quick access.

CPU Performance Enhancements

To maximize their efficiency and power, CPUs incorporate various performance enhancements. These improvements facilitate more fluid multitasking and faster processing of complex tasks.

Virtual Memory Management

Virtual memory management allows a system to use more memory than physically available. The CPU collaborates with the operating system to manage this process. It involves complex tasks like memory addressing, managing page tables, and swapping data between RAM and disk storage. This process ensures applications have enough memory to run efficiently, even when the physical memory is limited.
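To make the addressing step more concrete, here is a minimal sketch of how a virtual address might be translated through a page table. The 4 KiB page size, the `translate` function, and the table contents are assumptions chosen for illustration; real systems use multi-level tables maintained by the operating system and walked by hardware.

```python
PAGE_SIZE = 4096  # assumed page size in bytes (4 KiB)

def translate(virtual_address, page_table):
    """Map a virtual address to a physical address using a flat page table.

    page_table maps virtual page numbers to physical frame numbers.
    A missing entry stands in for a page fault, where a real operating
    system would load the page from disk and retry.
    """
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    frame_number = page_table.get(page_number)
    if frame_number is None:
        raise LookupError(f"page fault on virtual page {page_number}")
    return frame_number * PAGE_SIZE + offset

# Hypothetical table: virtual page 0 lives in frame 5, page 1 in frame 2.
page_table = {0: 5, 1: 2}
physical = translate(4100, page_table)
print(physical)  # 8196 (frame 2, offset 4)
```

The offset within the page is carried over unchanged; only the page number is swapped for a frame number.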

I/O Operations

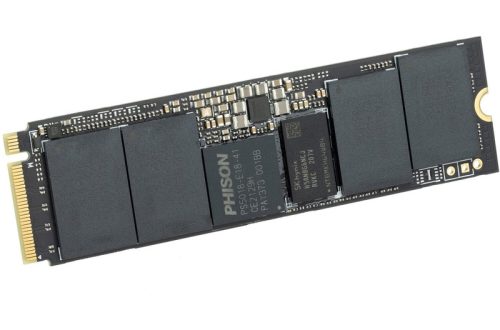

Input/Output (I/O) operations are how a CPU communicates with other devices, such as keyboards and hard drives. The CPU coordinates these data transfers, ensuring that information flows smoothly between the computer’s internal components and external devices. It’s essential for running your programs and accessing your files.

Interrupt Handling

Interrupts alert the CPU to events that require immediate attention, like a key press, an arriving network packet, or an error raised by a running application. The CPU responds by temporarily halting the current task, saving its state, and handling the interrupt. This could involve starting a different operation or addressing an error before resuming the interrupted task. It’s vital for maintaining a system’s responsiveness and stability.
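The save, handle, and resume sequence can be sketched with a toy model. The `ToyCPU` class, its single `acc` register, and the `pending` mapping of step numbers to interrupt names are all invented for this illustration; real CPUs save far more state and locate handlers through hardware-defined tables.

```python
class ToyCPU:
    """A one-register machine that checks for interrupts between steps."""

    def __init__(self):
        self.acc = 0    # working register shared by the task and the handler
        self.log = []

    def handle_interrupt(self, name):
        saved_acc = self.acc       # save the interrupted task's state
        self.acc = len(name)       # the handler uses the register for its own work
        self.log.append(f"handled {name}")
        self.acc = saved_acc       # restore state so the task can resume

    def run(self, steps, pending):
        """Run a counting task; pending maps a step number to an interrupt name."""
        for step in range(steps):
            if step in pending:    # an interrupt arrived before this step
                self.handle_interrupt(pending[step])
            self.acc += 1          # the main task's work
            self.log.append(f"task acc={self.acc}")
        return self.acc

cpu = ToyCPU()
final = cpu.run(3, {1: "keyboard"})
print(final)    # 3
print(cpu.log)  # ['task acc=1', 'handled keyboard', 'task acc=2', 'task acc=3']
```

Because the register is saved and restored around the handler, the main task finishes with the same result it would have produced without the interruption.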

Advanced CPU Features

Modern CPUs are designed with advanced features that go beyond basic data processing.

Multitasking and Multiprocessing

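Multitasking allows a computer to manage several tasks at once. On a single core, the operating system achieves this by rapidly switching between tasks, giving each a short slice of CPU time. Multiprocessing goes further: with multiple cores or processors, tasks can run truly in parallel.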
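One common way for a single core to juggle several tasks is round-robin time slicing. The sketch below is a simplified model, assuming made-up task names and abstract "work units"; real operating system schedulers also weigh priorities, I/O waits, and much more.

```python
from collections import deque

def round_robin(tasks, time_slice):
    """Return the order in which tasks receive CPU time.

    tasks maps a task name to its remaining work units.
    time_slice is the maximum units a task may run before yielding
    (assumed to be a positive integer).
    """
    queue = deque(tasks.items())
    schedule = []
    while queue:
        name, remaining = queue.popleft()
        ran = min(time_slice, remaining)
        schedule.append((name, ran))
        if remaining > ran:
            # Unfinished work goes to the back of the queue.
            queue.append((name, remaining - ran))
    return schedule

schedule = round_robin({"editor": 3, "browser": 5}, time_slice=2)
print(schedule)
# [('editor', 2), ('browser', 2), ('editor', 1), ('browser', 2), ('browser', 1)]
```

Each task makes steady progress even though only one runs at any instant, which is what makes a single core appear to do several things at once.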

Hyperthreading and Multithreading

Hyperthreading, Intel’s name for simultaneous multithreading (SMT), presents one physical core as two logical cores so that the core’s execution resources stay busier. Multithreading more broadly refers to a CPU’s ability to work on multiple threads of instructions concurrently.

Memory Management Unit (MMU)

The MMU translates the virtual addresses that programs use into physical addresses in RAM. This translation is what makes virtual memory possible, and it also lets the operating system keep each program’s memory separate from the others.

Understanding CPU Instruction Cycles

To grasp how CPUs carry out tasks, understanding their instruction cycles is key. Here’s an overview of the CPU instruction cycles you should know.

The Basic CPU Instruction Cycle

The CPU follows a cyclical process comprising three main actions: fetch, decode, and execute. First, the CPU fetches the next instruction from memory. Then it decodes the instruction to determine what action to perform. Finally, the CPU executes the instruction, completing the task at hand, and the cycle repeats with the next instruction.
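The fetch, decode, and execute loop can be sketched as a tiny interpreter. The one-register machine and its opcodes (`LOAD`, `ADD`, `MUL`, `HALT`) are invented for this example and do not correspond to any real instruction set.

```python
def run(program):
    """Run a list of (opcode, operand) pairs on a toy one-register machine."""
    acc = 0  # accumulator register holding the current result
    pc = 0   # program counter: index of the next instruction
    while pc < len(program):
        instruction = program[pc]      # fetch the next instruction
        pc += 1
        opcode, operand = instruction  # decode it into an action and its data
        if opcode == "LOAD":           # execute the action
            acc = operand
        elif opcode == "ADD":
            acc += operand
        elif opcode == "MUL":
            acc *= operand
        elif opcode == "HALT":
            break
        else:
            raise ValueError(f"unknown opcode: {opcode}")
    return acc

program = [("LOAD", 2), ("ADD", 3), ("MUL", 4), ("HALT", 0)]
result = run(program)
print(result)  # 20, computed as (2 + 3) * 4
```

The program counter is what ties the cycle together: each fetch advances it, so the loop naturally moves on to the next instruction.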

Improving Efficiency with Overlapping Cycles

CPUs boost efficiency by overlapping instruction cycles, a technique known as pipelining. While one instruction is being executed, the next one is being decoded and the one after that is being fetched. This means different parts of the CPU are simultaneously active. Overlapping cycles reduce idle time, allowing for near-continuous processing.
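A quick back-of-the-envelope calculation shows why overlapping helps. The sketch below assumes an idealized CPU in which every stage takes exactly one clock cycle and nothing ever stalls; the function names are our own, and real processors fall short of this ideal.

```python
def cycles_without_overlap(num_instructions, num_stages):
    """Each instruction passes through every stage before the next one starts."""
    return num_instructions * num_stages

def cycles_with_overlap(num_instructions, num_stages):
    """The first instruction fills the stages; after that, one finishes per cycle."""
    if num_instructions == 0:
        return 0
    return num_stages + (num_instructions - 1)

# Ten instructions on a three-stage (fetch, decode, execute) design.
print(cycles_without_overlap(10, 3))  # 30
print(cycles_with_overlap(10, 3))     # 12
```

Under these assumptions, the overlapped design approaches one completed instruction per cycle as the instruction count grows.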

Supercharging with Hyperthreading

Hyperthreading takes efficiency further. It allows a single core to manage two instruction streams at once. This means if one stream is waiting, for example on data from memory, the other keeps going. Because both streams share the same execution hardware, hyperthreading does not double a core’s processing capacity, but it often improves throughput on workloads with many threads.

Exploring CPU Terminology

Understanding CPU terms is essential for grasping computer function.

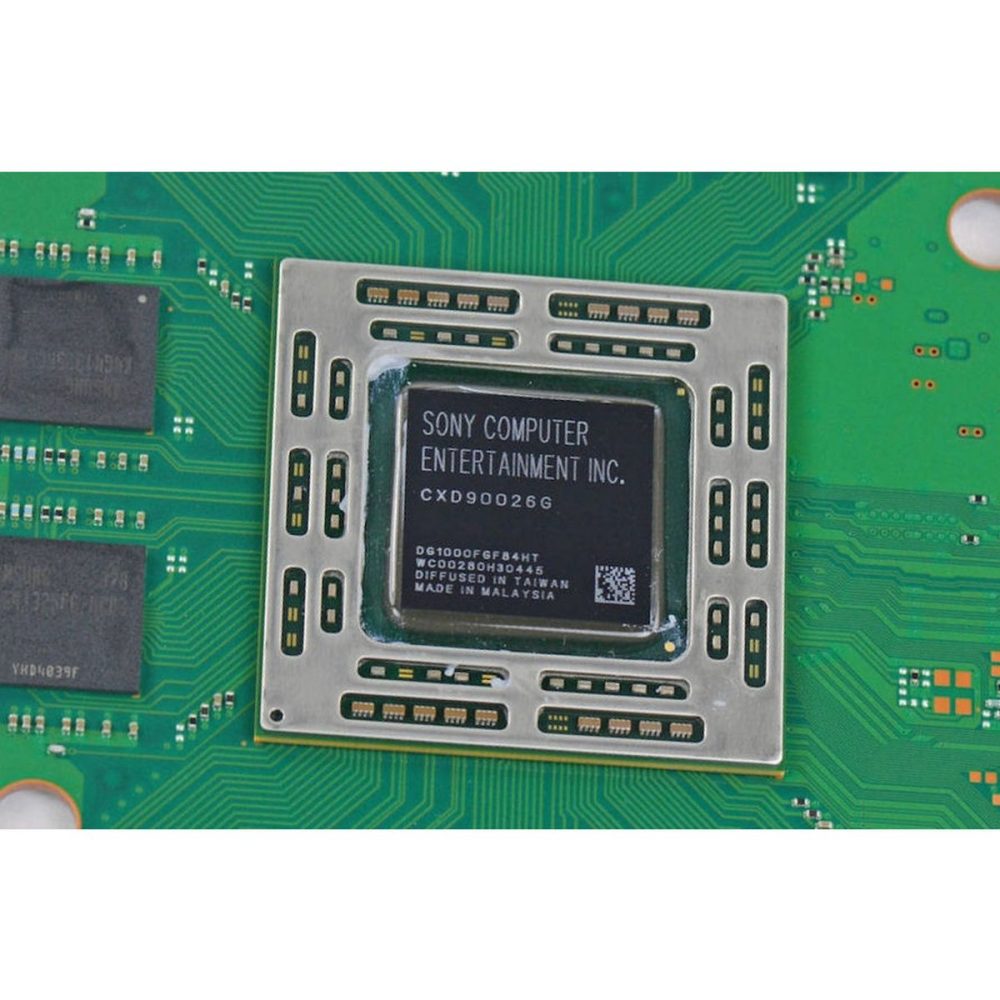

Distinguishing Between Cores, CPUs, and Sockets

In CPU talk, “cores” are the basic work units within a CPU. Each core can process instructions independently, allowing tasks to run in parallel. A “CPU,” or central processing unit, may have multiple cores, enabling it to handle various tasks at once. “Sockets” refer to the spots on a motherboard where CPU chips are installed. Each socket type has its own physical layout, so a CPU must match the socket on the motherboard.

When we combine cores into one unit, we get a CPU package, often just called a CPU. This package fits into the motherboard socket. A core with hyperthreading runs two instruction streams at once, so the operating system sees it as two logical processors. A chip with six cores therefore appears as twelve logical processors, although it does not deliver twelve cores’ worth of performance. You’ll hear terms like “single-core,” “dual-core,” or “quad-core” CPUs, reflecting the number of cores.
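The relationship between sockets, cores, and threads comes down to simple multiplication. Here is a minimal sketch; the function name `logical_processors` is our own.

```python
def logical_processors(sockets, cores_per_socket, threads_per_core):
    """Count the logical processors an operating system would see."""
    return sockets * cores_per_socket * threads_per_core

# One six-core chip, without and with two threads per core.
print(logical_processors(1, 6, 1))  # 6
print(logical_processors(1, 6, 2))  # 12
```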

Decoding the Output of ‘lscpu’ Command

The ‘lscpu’ command in Linux reports details about the CPU. It shows the architecture, the number of CPUs (counted as logical processors), and more. When we run ‘lscpu,’ we see the number of threads per core, which tells us if hyperthreading is active: a value greater than one means it is. We also see core counts per socket and total sockets, meaning the number of chips on the motherboard. Cache info is also there, showing how much quick-access memory is available.

Decoding ‘lscpu’ helps us understand the CPU’s build. It tells us what our machine can handle, from simple tasks to complex operations. It’s a snapshot of the system’s processing power. Knowing this, we can match software with the right hardware, ensuring smooth performance.
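As a rough illustration, the snippet below parses ‘lscpu’-style text into a dictionary. The sample values are made up for this example rather than taken from a real machine, and the helper name `parse_lscpu` is our own; the field labels follow the “Key: value” layout that ‘lscpu’ prints.

```python
# Illustrative sample only; these numbers do not describe a real system.
SAMPLE_LSCPU = """\
Architecture:        x86_64
CPU(s):              12
Thread(s) per core:  2
Core(s) per socket:  6
Socket(s):           1
L3 cache:            12 MiB
"""

def parse_lscpu(text):
    """Turn 'Key: value' lines into a dictionary of strings."""
    fields = {}
    for line in text.splitlines():
        if ":" in line:
            key, value = line.split(":", 1)
            fields[key.strip()] = value.strip()
    return fields

info = parse_lscpu(SAMPLE_LSCPU)
# More than one thread per core suggests hyperthreading (SMT) is active.
smt_active = int(info["Thread(s) per core"]) > 1
print(info["CPU(s)"], smt_active)  # 12 True
```

On a Linux machine, the same function could be fed live output, for instance from `subprocess.run(["lscpu"], capture_output=True, text=True).stdout`, though the exact fields shown vary between systems and versions.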

The Impact of CPU on System Performance

The performance of a computer system is heavily influenced by the CPU. This is because the CPU is responsible for executing instructions and processing data. How quickly and efficiently a CPU can perform these tasks directly impacts system performance, including application response times and the system’s ability to handle concurrent tasks.

CPU Cache Hierarchy and Data Flow

The CPU cache is a tiered system that works to streamline data flow. It consists of multiple levels (L1, L2, and L3) that store data and instructions close to the CPU’s cores. The L1 cache is the fastest and smallest, and each core has its own. Next, the L2 cache is larger, but slightly slower. The L3 is larger still, typically shared among cores, and balances size and speed.

When the CPU needs data, it first checks the L1 cache, then the L2, and finally the L3 cache before reaching out to the slower RAM. This hierarchy ensures the most-used data is quickly accessible to the CPU, reducing the need to fetch data from the main memory. This decreases the wait time for information, thereby improving overall system responsiveness and performance.
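The lookup order can be sketched as a simple search through each level in turn. The latencies below are round numbers assumed for illustration, not measurements of any real CPU, and the `lookup` function and cache contents are hypothetical.

```python
# Assumed access costs in clock cycles, for illustration only.
LEVELS = [("L1", 4), ("L2", 12), ("L3", 40)]
RAM_LATENCY = 200

def lookup(address, caches):
    """Search each cache level in order and return (where found, total cycles).

    caches maps a level name to the set of addresses it currently holds.
    The cost of every level checked along the way is added up.
    """
    cost = 0
    for name, latency in LEVELS:
        cost += latency
        if address in caches[name]:
            return name, cost
    return "RAM", cost + RAM_LATENCY

caches = {
    "L1": {0x10},
    "L2": {0x10, 0x20},
    "L3": {0x10, 0x20, 0x30},
}
print(lookup(0x10, caches))  # ('L1', 4)
print(lookup(0x30, caches))  # ('L3', 56)
print(lookup(0x40, caches))  # ('RAM', 256)
```

Even with made-up numbers, the pattern is clear: a hit in L1 costs a small fraction of a trip all the way to RAM.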

Interplay Between CPU and RAM

RAM serves as the primary storage from which the CPU fetches data for processing. However, if the CPU had to access the RAM for every operation, performance would suffer due to the slower speed of RAM compared to CPU caches. To mitigate this, CPUs utilize their cache system to reduce the frequency of RAM access, speeding up instruction execution and data processing.

The collaboration between CPU and RAM is critical. While the CPU handles the active tasks, RAM holds the programs and data that are in use. Efficient data transfer between RAM and the CPU’s caches is vital for system stability and speed. A CPU with a larger and faster cache can handle more data and reduce its reliance on RAM, which translates to faster system performance.

In short, the CPU’s efficiency, supported by its cache system and interaction with RAM, is fundamental in defining a computer’s ability to execute tasks seamlessly and maintain optimal performance.

Conclusion and Final Thoughts on CPU Function

After exploring the intricate details of CPU function, it’s clear this component is the center of computing power. The interplay of its elements, like the control unit (CU) and arithmetic logic unit (ALU), and the precision of its instruction cycles are pivotal for a computer’s operation. Enhancements such as cache hierarchy and hyperthreading elevate its capability, allowing for swift multitasking and robust performance.

A deeper grasp of CPU intricacies lets us appreciate the advanced nature of modern computing. Each leap in CPU technology extends a system’s ability to run complex software with agility. From fetching to executing instructions, every CPU function plays a vital role in how we interact with technology daily.

While terms like cores, caches, and clocks may seem daunting, they all converge to create a seamless user experience. Maintaining a system’s peak performance relies on the CPU’s smooth coordination with memory resources, from on-die caches to system RAM. This harmony is crucial for meeting our expectations of instantaneous computing responses.

Handling interrupts, managing program flow, and optimizing data retrieval make the CPU more than just a piece of hardware; they underline its central place in contemporary digital life. Understanding the CPU’s capabilities lets us tailor our hardware choices to our computing needs. Thus, appreciating CPU function is not only about recognizing the limits of technology but also about pushing boundaries to innovate and improve.

As we continue to witness advancements in CPU design, from increased core counts to sophisticated power management, one thing remains constant: the centrality of the CPU in shaping our computing experiences. It is the heartbeat of a world increasingly powered by digital innovation. Glancing towards the horizon of technology, the CPU will undoubtedly continue to be a cornerstone of computation, ever-evolving to meet the demands of the future.